Public Service AI Trust Model: Toward practical transparency

AI in government is here, but how do we maintain public trust? The Public Service AI Trust Model is a draft tool to support agencies navigating transparency, accountability, and responsible AI adoption.

Introduction

New Zealand's Minister for Digitising Government wants "agencies to adopt AI in ways that are safe, transparent and deliver real value for New Zealanders while upholding the highest standards of trust and accountability."

The challenge for governments worldwide is to take opportunities to improve efficiency and enhance public services, while providing transparency and assurance that this is being done with all due caution and with the interests of the public at heart.

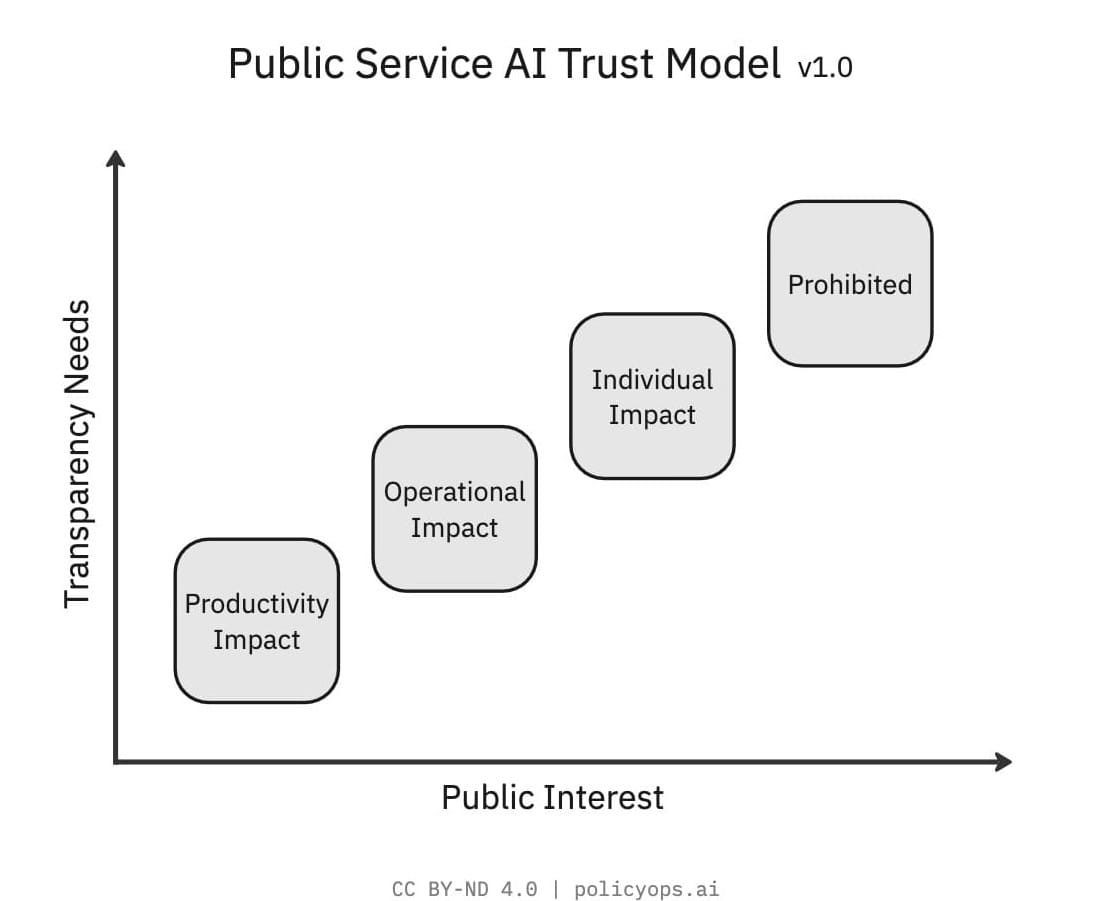

The Public Service AI Trust Model is a tool for communicating within government and to the public. It's offered for discussion as working-version 1.0, under Creative Commons BY-ND 4.0 (free for use without adaptation, with attribution).

The Problem: Trust, Transparency, and Public Interest

Public sentiment towards AI ranges from high enthusiasm to existential fear. The public service's posture towards AI transparency will affect not only trust and confidence in any elected government, but also trust and confidence in the institution of government itself.

Communicating about AI is inherently complex. AI represents a range of rapidly evolving technologies which can be orchestrated in various ways, in various public service contexts. Few have the resources to engage on this topic at a purely technical level. Assurances that New Zealand is adhering to international AI conventions will provide comfort to some, while sparking fears for others.

While we must continue to have important public conversations about technologies and international regulatory considerations, there remains a gap in our ability to talk about what matters to most people about AI, in language that is clear and meaningful.

Introducing the Public Service AI Trust Model

The Public Service AI Trust Model focuses on the impact of AI under four categories.

- Productivity Impact – AI enhancing individual public servant efficiency.

- Operational Impact – AI supporting operational performance and resource allocation.

- Individual Impact – AI directly affecting individual members of the public.

- Prohibited AI – AI that crosses ethical or democratic boundaries.

Model applications

This model may serve as a useful adjunct to existing frameworks and guidance:

- As a heuristic for public service leaders considering current and proposed AI use cases.

- When mapping current and proposed use of AI to public interest and transparency needs.

- As a lens when developing proportionate risk mitigations and governance approaches.

- As a communication tool.

Cross-cutting concerns

Drawing from models such as TOGAF, the model allows for extensibility through the addition of cross-cutting concerns. However, to maintain the integrity and simplicity of the model, v1.0 cross-cutting concerns are constrained to:

- Privacy – Compliance with privacy laws and public expectations.

- Security – Data protection, cyber risks, identity and access management, AI model security.

- Public Value – Reporting of discernible, measurable benefits, in accordance with performance reporting standards set by the Office of the Auditor General.

Why is 'Prohibited' included?

Public interest in government use of AI extends beyond how AI is used to include clarity on areas where its use is not acceptable. The Prohibited category provides an essential boundary condition for social licence. Even in cases where full transparency may not be possible for security reasons, public trust depends on a clear understanding of what use cases are agreed to be 'out of bounds' for government AI adoption.

Potential criticisms of the model

The model does not include numerous important things.

The model does not attempt to cover every issue in AI governance. Instead, it prioritises simplicity – providing a structured way to assess AI’s impact while avoiding terms that may carry different meanings for different audiences. It is designed as a starting point, complementing broader policy and regulatory discussions across other AI framework and guidance components.

Transparency acts as a 'forcing function' for many key concerns, including sound governance and auditability. Public value serves a similar role for fairness and effective technology use. While privacy and security may be shaped by transparency, they are explicitly highlighted due to their broadly recognised public importance.

The model does not highlight the differences between various AI technologies.

By focusing on the impact of AI, the model aims to remain relevant throughout ongoing changes to underlying technologies. It also supports public service policy and decision-makers who need to easily contextualise AI initiatives in their unique settings. While the model focuses on AI impacts, public transparency reporting can provide necessary detail for audiences with specific technical interests.

Some AI initiatives may span (or may evolve to span) more than one impact category.

Multiple impact categories for a single initiative can be highlighted in public transparency reporting.
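For readers working with a machine-readable register of initiatives, one way to picture this is sketched below. It is a minimal illustration only, not part of the model itself: the `Initiative` record, the `group_by_category` helper, and the sample entries are hypothetical, and only the four category names are taken from the model.

```python
from dataclasses import dataclass, field
from enum import Enum


class ImpactCategory(Enum):
    """The four impact categories named in the model."""
    PRODUCTIVITY = "Productivity Impact"
    OPERATIONAL = "Operational Impact"
    INDIVIDUAL = "Individual Impact"
    PROHIBITED = "Prohibited AI"


@dataclass
class Initiative:
    """Hypothetical register entry; field names are assumptions."""
    name: str
    agency: str
    # A set, so a single initiative can carry more than one category.
    categories: set[ImpactCategory] = field(default_factory=set)


def group_by_category(initiatives):
    """List initiative names under every category they are tagged with."""
    report = {category: [] for category in ImpactCategory}
    for initiative in initiatives:
        for category in initiative.categories:
            report[category].append(initiative.name)
    return report


# Invented sample entries, for illustration only.
sample = [
    Initiative("Drafting assistant", "Agency A",
               {ImpactCategory.PRODUCTIVITY}),
    Initiative("Application triage", "Agency B",
               {ImpactCategory.OPERATIONAL, ImpactCategory.INDIVIDUAL}),
]

report = group_by_category(sample)
for category, names in report.items():
    print(f"{category.value}: {', '.join(names) or 'none reported'}")
```

Because an initiative tagged with two categories appears under both headings, a reader interested only in Individual Impact still sees every initiative relevant to them, even if its main purpose is operational.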

The distinction between Productivity Impact and Operational Impact seems academic.

Productivity Impact centres on 'commodity AI', delivered by major platform providers. Operational Impact may involve strategic orchestration of commodity AI components, with or without the addition of bespoke components. Risk profiles and public interest are likely to be qualitatively different, and the opportunity to segment regulatory approaches may be useful.

There are no examples.

Examples are under development using publicly available New Zealand government AI use cases and international examples. If you would like to collaborate, have a similar or related project, or would just like to provide critique or feedback, let's connect.

Public Service AI Trust Model by Stuart MacKinnon is licensed under CC BY-ND 4.0

• • •

This article was inspired by a recent conversation with Shannon Barlow. If you would like to watch that, or read the transcript, it's available here.

You may also want to read Five Questions for Government Agency Leaders posted recently, which includes trust and transparency as a key consideration.

19 March 2025 Update

I have now extracted a 2024 snapshot of 85 NZ government AI initiatives across 18 agencies, in machine-readable format (see the October NZ Internal Affairs proactive release, available here). My thanks to IBM for making their open-source Deep Search Docling AI document processing tools available for this kind of work.

If you would like to collaborate on testing the potential usefulness of the model, or adapting it so it is useful, please reach out.